Cache and main memory are both internal computer memory. The cache holds frequently used data, whereas the main memory (RAM) holds the programs and data that are currently in use.

The cache is much faster and more expensive than the main memory. However, both of these computer memories are directly accessible by the processor.

Before going further, let us discuss what computer memory is.

What is a Computer Memory?

Memory is a hardware device used to store programs or data. Memory is further classified into two categories:

- Primary memory

- Secondary memory

Primary memory is memory that the processor can access directly. Whenever the processor needs data that is not yet in main memory, the data is first loaded from secondary memory into main memory. The processor then accesses the data from the main memory.

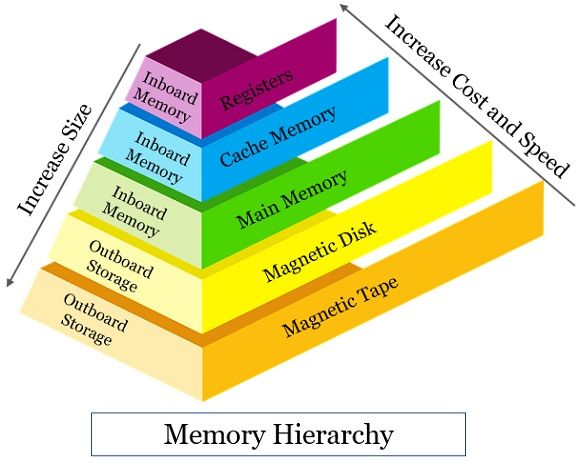

There are, however, more kinds of computer memory than these two. The diagram shows the hierarchy of the different kinds of memory.

Comparison Chart

| Basis of Comparison | Cache Memory | Main Memory |
|---|---|---|
| Purpose | Stores frequently used data. | Holds the data that is currently being processed. |
| Access | Faster than main memory. | Slower than cache, but faster than secondary memory. |
| Cost | More expensive than main memory. | Cheaper than cache, but more expensive than secondary memory. |
| Size | Smaller than main memory. | Larger than cache memory. |
| Types | L1, L2 and L3 | SRAM and DRAM |

What is Cache Memory?

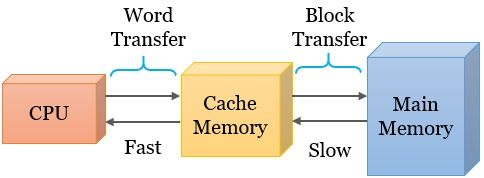

Usually, the data on which the processor is working is placed in the main memory (RAM). While the processor is working on the data, a copy is also placed in a memory that is much faster than the main memory. We refer to this memory as a cache.

So, whenever the processor needs a piece of data, it first searches for that data in the cache. If the data is available in the cache, the processor accesses it directly from there. If the data is not available in the cache, the processor fetches it from its source, which here is the main memory.

The fetched data is also copied into the cache, on the assumption that the processor will soon need it again. Thus, we can conclude that the cache holds frequently used data. The next time the processor requires the data, it can access it from the cache at a much faster rate.
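The lookup described above can be sketched in a few lines of Python. This is only a toy model, not how cache hardware is built: `main_memory`, `cache`, `stats` and `read` are hypothetical names, the memory contents are made up, and both memories are modelled as dictionaries keyed by address.

```python
# Toy model: main memory and cache are plain dictionaries keyed by address.
main_memory = {addr: addr * 10 for addr in range(16)}  # made-up contents
cache = {}
stats = {"hits": 0, "misses": 0}


def read(addr):
    """Return the value at addr, checking the cache before main memory."""
    if addr in cache:
        stats["hits"] += 1          # cache hit: serve the fast copy
        return cache[addr]
    stats["misses"] += 1            # cache miss: go to the source
    value = main_memory[addr]
    cache[addr] = value             # keep a copy for the next access
    return value


first = read(5)    # not cached yet, so this is a miss
second = read(5)   # now cached, so this is a hit
print(first, second, stats)
```

The first read of an address is a miss and the second is a hit, which is the whole point of keeping the copy. The sketch never evicts anything, so it ignores the limited capacity of a real cache.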

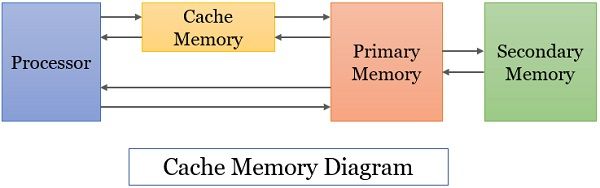

Here we can say that the cache acts as a buffer between the main memory and the processor. The capacity of the cache is small, typically ranging from a few KB to a few MB.

Cache Memory Diagram

Cache Mapping Techniques

Cache mapping is the technique that decides where a block loaded from the main memory is placed in the cache. Consider a situation where the cache is full and the processor refers to a word that is not present in the cache.

In that case, the cache control hardware removes a block to make space for the new block containing the referenced word. There are three techniques for this, as discussed below:

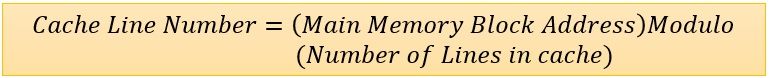

- Direct-Mapped Cache – Each block of main memory can be mapped to only one possible cache line. If the chosen cache line is already occupied by another memory block, the cache controller removes the old memory block to free the cache line for the new one. A formula decides which memory block maps onto which cache line.

- Associative-Mapped Cache – Any main memory block can be mapped to any cache line. It is more flexible than direct mapping.

- Set-Associative-Mapped Cache – This technique lies somewhere between direct mapping and associative mapping. Here the cache lines are grouped into sets, and a memory block can be mapped onto any of the cache lines of one specific set.
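The article does not spell out the mapping formula. A commonly used one takes the block number modulo the number of cache lines (or modulo the number of sets for a set-associative cache). The sketch below assumes that formula; the cache geometry (`NUM_LINES`, `NUM_WAYS`) and the function names are hypothetical and chosen only for illustration.

```python
NUM_LINES = 8                      # hypothetical cache with 8 lines
NUM_WAYS = 2                       # lines per set (set-associative case)
NUM_SETS = NUM_LINES // NUM_WAYS   # 4 sets of 2 lines each


def direct_mapped_line(block):
    """Direct mapping: exactly one candidate line per memory block."""
    return block % NUM_LINES


def set_associative_lines(block):
    """Set-associative mapping: any line inside one specific set."""
    set_index = block % NUM_SETS
    start = set_index * NUM_WAYS
    return list(range(start, start + NUM_WAYS))


def fully_associative_lines(block):
    """Associative mapping: any line in the cache is a candidate."""
    return list(range(NUM_LINES))


for block in (13, 21):
    print(block,
          direct_mapped_line(block),
          set_associative_lines(block),
          fully_associative_lines(block))
```

With these assumed sizes, blocks 13 and 21 both map to line 5 under direct mapping, so loading one evicts the other. Under set-associative mapping they share a set of two lines and can sit in the cache side by side.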

Types of Cache

We can classify cache into three types based on its closeness to the processor.

- L1 Cache – The L1 cache is usually embedded in the processor. Thus, it is the cache closest to the processor.

- L2 Cache – The L2 cache is larger and slower than L1. L2 is implemented either in the processor or on a separate chip. The processor can directly access the L2 cache.

- L3 Cache – L3 is implemented to improve the performance of the above two caches. In a multicore processor, the L3 cache sits outside the individual cores and is shared by all of them. It is slower than L1 and L2 but faster than main memory.
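To see why the ordering of the levels matters, the sketch below walks through L1, L2 and L3 before falling back to main memory. It is a simplified model under stated assumptions: the levels are searched one after another, and the access costs are made-up numbers in arbitrary units, not measurements of any real processor. `LEVELS`, `MAIN_MEMORY_COST`, `contents` and `access` are hypothetical names.

```python
# Hypothetical access costs in arbitrary units; real values vary by processor.
LEVELS = [("L1", 1), ("L2", 4), ("L3", 20)]
MAIN_MEMORY_COST = 100

# Which addresses each level currently holds (made-up example state).
contents = {
    "L1": {0x10},
    "L2": {0x10, 0x20},
    "L3": {0x10, 0x20, 0x30},
}


def access(addr):
    """Search L1, then L2, then L3, then main memory.

    Returns (where the data was found, accumulated cost).
    """
    cost = 0
    for name, level_cost in LEVELS:
        cost += level_cost              # pay for checking this level
        if addr in contents[name]:
            return name, cost
    return "main memory", cost + MAIN_MEMORY_COST


for addr in (0x10, 0x20, 0x30, 0x40):
    print(hex(addr), access(addr))
```

Under these assumed costs, data found in L1 is far cheaper to reach than data that has to come from main memory, which is why the smallest and fastest level is checked first.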

Advantages and Disadvantages of Cache

Advantages

- Processors can access the contents of the cache faster than the main memory.

- The cache also improves the performance of the processor.

Disadvantages

- Cache memories are smaller and more expensive than main memory.

- It is a volatile memory, as its data is lost as soon as the power is switched off.

What is Main Memory?

The main memory holds the data or program on which the processor is currently working. The processor can directly access the content of the main memory. We refer to main memory as RAM (Random Access Memory) or primary memory.

The main memory consists of a large number of semiconductor storage cells. These cells are not accessed individually. Instead, the processor handles them in groups of fixed size that we refer to as words.

The processor is able to access a word in one basic operation. Conventionally the length of a word can be 16, 32, or 64 bits.
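As a small illustration of word-based access, the sketch below finds which word a byte address falls into. It assumes a byte-addressable memory with 32-bit words, which the article does not state; `WORD_BITS`, `BYTES_PER_WORD` and `locate` are hypothetical names.

```python
WORD_BITS = 32                      # assumed word length (could be 16 or 64)
BYTES_PER_WORD = WORD_BITS // 8     # 4 bytes per word


def locate(byte_address):
    """Return (word index, byte offset within that word)."""
    return divmod(byte_address, BYTES_PER_WORD)


word_index, offset = locate(13)
print(word_index, offset)
```

With 4-byte words, byte address 13 lies in word 3 at offset 1, so the processor would fetch word 3 as a whole in one operation.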

Types of RAM

On the basis of technology, we can divide RAM into two categories: static RAM and dynamic RAM.

- Static RAM (SRAM)

Static RAM is volatile, as its content is lost once the power supply is off. It uses flip-flop (latch) circuitry to store each bit. It is faster and more expensive than DRAM, which is why it is typically used for caches.

- Dynamic RAM (DRAM)

DRAM is the most widely used RAM for main memory. Like SRAM, DRAM is volatile, as its content is lost once the power supply is off. Each memory cell is made up of a transistor and a capacitor and stores a single bit as charge on the capacitor. A charged capacitor represents binary 1 and a discharged capacitor represents binary 0. Because the charge leaks away over time, DRAM has to be refreshed periodically.

Advantages and Disadvantages of Main Memory

Advantages

- A processor can access the content of the main memory faster than the secondary memory.

- Main memory consumes less power.

- Enhances the performance of the processor.

Disadvantages

- Main memory is smaller and more expensive than secondary memory.

- Comparatively, main memory is slower than cache.

- Main memory holds data temporarily as it is a volatile memory.

Key Differences Between Cache and Main Memory

- The main purpose of a cache is to hold the frequently used data and programs. On the other hand, the main memory holds currently processed data.

- The processor is able to access both cache and main memory directly. Still, the processor can access the cache much faster than the main memory.

- The cache is more expensive than the main memory.

- The cache is comparatively smaller than the main memory.

- On the basis of nearness to the processor, we classify the cache into three types: L1, L2 and L3. On the basis of technology, the main memory is classified into two types: static RAM and dynamic RAM.

Conclusion

In the content above, we have briefly described the difference between the cache and main memory. Both memories are volatile and fast. The cache holds frequently used data. Whenever the processor requests any data, it is first searched for in the cache. If the processor finds the data in the cache, it accesses the data from there. Otherwise, it fetches the data from the main memory and puts a copy in the cache in case the processor needs it again soon.
